It’s tricky but the short answer is yes, sorta. There are certain limitations, of course, but with some creative problem solving, you can do quite a bit with this ETL tool.

But First: What is web scraping?

The general goal of web scraping is to extract data from a website without the use of an API or another type of strict data format. Modern websites are often built on top of databases and other formalized data storage methods, but they are usually intended as a presentation layer for humans to interact with and not for any sort of automated ingestion by machines. The main exception to this is web crawlers, which are a type of web scraping that search engines use to learn about sites and know when to show a page as part of a search. Because it is such an essential service for both sites and search engines, quite a bit of technology has built up around accommodating this particular form of scraping, and efforts are made on the part of crawlers to be respectful of the site’s bandwidth and wishes over what should and shouldn’t be crawled. Beyond that, however, scraping should be treated as more of a last resort. Many sites provide web APIs which provide more efficient, machine friendly formats than a web page, so it’s usually worth it to search the site to see if there is one. Sometimes there can be undocumented ones that exist just to assist in serving the page, but can be leveraged if you’re willing to figure out the details.

Is this even legal?

I claim no true expertise on this due to neither being a lawyer or having consulted a lawyer about this specific issue, but there are a number of legal precedents that have been set for and against, but not much in terms of laws explicitly allowing or forbidding it, so currently it’s considered highly situational. Proponents of scraping have based the idea of “right to scrape” off of the ancient tradition in many countries known as “right to roam” by which individuals are allowed access to private land as long as their activities are not destructive or economically exploitative. This idea translates rather easily into a few simple guidelines for scraping:

- It should be public facing. If you have to log in to access a page, you are going into an area where the data is in some way protected, and there is a much higher chance of scraping data that the site owners or other users don’t necessarily want people freely sharing.

- It should be unintrusive. An individual user generally can’t click around a site fast enough to effect the performance of the site by themselves, but an automated process that constantly accesses thousands of pages can quickly become a problem for the site owner. Modern DDoS detection and blocking are also liable to prevent you from scraping the site at that speed, so it is in everyone’s best interest to be polite and keep a strong cap on how much scraping you do.

- It shouldn’t be copyright infringing. This is as much a matter of the intent as it is the method. In short, sites and their pages are the intellectual property of their owners and subject to those laws. This means that despite many people’s interpretation of fair use, you don’t have the right to scrape pages and reproduce them in whole or in part on your own site. Research however, is covered by fair use, so if you are taking the pages and extracting data from them (word counts, etc.) and only publishing the results of your findings, you should be fine as long as you are following the above guidelines.

The scenario: Recipe Ingredients

I quite like Blue Apron‘s meal delivery service. Getting 3 recipes and all the necessary ingredients to make them each week gave me a gentle push into learning how to cook. After a while, I noticed that recipes pull from a limited pool of possible ingredients that come in specific quantities. That in turn led me to wonder what sort of different analysis could be done with just the recipe ingredients. We’ll be saving the actual analysis for a future post (or more), since like so many analytics projects, gathering and cleaning the data is more than enough to take up a post. Anyways, Blue Apron provides all of their recipes in an easy to access cookbook without any sort of login needed, so it’s sufficiently public facing. Visiting each recipe’s page could become intrusive, but since the use case is just to capture the data once and analyze it, there is no need to schedule this process to run regularly, so as long as it is only scraping one recipe page at a time, it shouldn’t be too much of a strain. The recipes themselves are very much the property of Blue Apron, but as long as the only part of the recipes stored is the ingredients list and the raw pages are not published elsewhere, it shouldn’t be an issue.

The basic strategy

So the idea is simple: The cookbook provides an index of all of the recipes with links to each recipe’s page, which contains a list of ingredients in a specific section on the page. The goal is to find all of the links to recipes (and only the recipes) on the cookbook page, go to the individual page, and read just the section that has ingredients and throw everything else out.

The language primarily responsible for a web page is HTML, which uses a series of tags to structure and format the page. Originally everything in a web page was handled by HTML, but nowadays its duties are split with javascript and CSS, the former handling all interactive aspects of a page and the latter handling all of the visuals and layout. This modern way of making pages is a bit of a double edged sword. On the one hand, CSS adds attribute properties to HTML tags that makes it easier to pinpoint exactly which tags contain the data we’re looking for. On the other hand, javascript’s ability to dynamically change a page’s HTML based on interaction makes it hard to make sure the necessary information is available. You can see this in action when you open up the cookbook with the “Load More Recipes” button on the bottom of the page. The more typical way of resolving this when programming a web scraper is to build what amounts to a very barebones web browser that simulates the necessary user interactions to trigger the javascript before parsing the HTML. This would take some time to figure out even using a general purpose programming language like python with a pile of libraries to assist. Since I am using an ETL tool geared towards data stored in rows and columns, this is a largely insurmountable issue to automate.

Fortunately there is a rather straightforward solution: Go to the page, scroll until there are no recipes left to load, and copy the contents of the appropriate div tag to a file via the inspector. This may sound like a strange thing for someone whose career has been built around automating data processes, but as Randall Munroe of XKCD fame has pointed out before, it doesn’t make much sense to spend a huge amount of time figuring out how to automate something that doesn’t take much time to do, and hitting page down until there is nothing left to load and copying the contents of the appropriate div tag takes a matter of minutes. Development is also a bit easier with a static file instead of working with a web URL. Some further adjustments to the data will be necessary before it can be processed by PDI, however.

Parsing HTML

There isn’t a step in PDI specifically for parsing data out of HTML, but there is an Get Data from XML step. The two standards are similar, with HTML looking like XML using very specific tags. However, HTML is much less strict than XML, and while you can make websites in an XML compliant way (XHTML), it’s not actually necessary to close a number of tags. PDI is particularly strict about closing tags, so running the index file will give an error message about the img tags not being properly closed. Since this is a static file and not being read from a page, it’s once again probably going to be easier to edit the file than try to come up with some sort of logic to work around the restriction. The only thing I am interested in is the links to the individual recipe pages, so I could just do a find and replace in a text editor to remove the img tags altogether. The issue with this idea is that a large part of the tag is the unique file name for the image, so to eliminate all of them would require some sort of regular expression (assuming you’re using an editor like notepad++ that has that functionality) that will only pick up the img tags and nothing else. Fortunately, looking at the tags shows that despite the unique name in the middle, all of the tags end with the same ?width=512″>. So instead of trying to eliminate the tags altogether, replacing ?width=512″> with ?width=512″></img> will close all of the img tags and make them XML compliant. The last formatting caveat is that there must be a single root node when reading from a file, so since I copied the interior of the div tag, I just have to put a tag around everything else. I just called it root since it doesn’t actually have to be an HTML tag.

With the file issues out of the way, now it’s a matter of getting the step set up to read the information we want. The step relies on a query language called XPath to determine what data to extract. In the content tab, if you push the Get XPath nodes button on the right hand side of the window, it will bring up a list of paths. The path selected determines what is considered the root for the sake of parsing out individual rows. Choosing /root will cause there to be only one row (since there is only one root node), so the right choice is going to be one close to the link, which in this case would be root/div/div/a. Going over to the field tab and pressing the Get Fields button will fill in a number of fields automatically, but previewing the rows will show that the URL information is not among them. This is because the Get Fields button only detects nodes below the selected path, ignoring things like attributes, which is where the URL is actually stored. This easy enough to fix as @href is all that is necessary to check the base node’s href tag which contains the URL. With all of the other tags removed, the step can read all of the URLs.

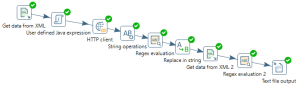

Retrieving the recipe pages

The rows of URLs are still lacking a vital part necessary for retrieving the recipes: they are locally referenced, meaning they don’t have the actual domain the page belongs to. This is easy enough to fix, as there are many steps that could be used to concatenate the domain onto the URLs. The method I find easiest is to use the User Defined Java Expression step with a single expression like “https://www.blueapron.com”+link to produce the complete URL. This new field can then be used in the HTTP client step to retrieve the recipe pages without any further configuration of the step needed. This is where things get complicated (yes, I consider all of the previous steps to be pretty simple) since now the HTML has to be parsed without the luxury of being able to use a text editor to make it XML compliant. A little inspection of a recipe page reveals a couple useful points we can leverage though. The first is that the page comes through sufficiently whole so that no interaction is needed to have get the ingredients. If it weren’t for that, this would be a dead end, and this approach wouldn’t be possible. The other is that the ingredients are all contained within a section tag with a specific class attribute.

Cleaning up HTML with Regular Expressions

If you ask around in most programming circles, responses to the notion of trying to parse XML or HTML with regular expressions will range from unwise to staring directly into the eye of madness. My goal isn’t to completely parse the HTML though, simply extract the specific section of the page with the recipes and remove any extraneous tags that would cause the Get Data from XML step to fail, so there is no need to get more than ankle deep in this dark art. The first step in this is removing any new line characters from the page with the String Operations step since regex evaluators can be inconsistent with how those are handled, so it’s easier just to eliminate them ahead of time. Next is the regex evaluation step. A typical use case for this step is to check if a field matches a regex pattern and returning a true or false, but it has some additional capabilities with capture groups. These allow the step to record the parts of the expression that match the groups to separate fields. What isn’t obvious about this is that all of the capture groups still constitute a single expression that gets a True/False evaluation. This means that I have to make sure that the entire page’s contents match the expression while ensuring that a capture group contains only the ingredients section. After a good deal of trial and error, the strategy was to make 3 separate capture groups. The center one would be the one that started with a section tag and the class name and ended with closing tag. The other two groups would simply match any character that came before and after to make sure that the expression would evaluate true. Using this evaluator’s particular syntax gave the expression (?<beforeSection>.*?)(?<ingredientSection><section class=’section-recipe recipe-ingredients.*?</section>)(?<afterSection>.*?) with the capture groups producing 3 fields named beforeSection, ingredientSection, and afterSection respectively. Just to make sure that everything would consistently match, I also checked Ignore differences in Unicode encodings, Enables case-insensitive matching, and Enable dotall mode on the content tab.

There are still a couple HTML tags that could cause problems with the XML parser that need to be taken care of, namely the img and input tags. Fortunately, the Replace in String step can be used to remove sections of a string that match a regex pattern. By setting it to use Regex, leaving the Outstream field and Replace with columns blank, and to set the empty string, it will simply delete anything that matches the pattern. Most importantly, this works for as many patterns as necessary in as many fields as necessary. From here it is straight to a second Get Data from XML step. The setup is similar to before, but this time the data is actually coming from what is written inside of a node instead of from an attribute. A little investigation in the recipe’s source will show that the XPath Loop for the individual ingredients looks like /section/div/div/ul/li/div, which means that the name of the ingredient is directly in the base node (which in XPath is simply .) but if the default Result Type is set to the default Value Of it will actually remove some of the whitespace and give the ingredient and the quantity in a single field. I want the quantity separate, which is easy enough since it is in a separate span tag. Setting the ingredient to Single Node instead Value Of will return everything within the tag, which includes the entirety of the subtags. Using another Regex evaluation step with capture groups similar to before with (?<ingB>.*?</span>)(?<ing>.*?)(?<ingA></div>) now places the ingredient name in a separate field.

Wrapping it up

At this point it’s just a matter of sending the necessary fields to a text file output. I only included the local URL as a unique identifier for the recipe, the ingredient name, and the quantity as columns in the file. Further improvements could probably be made, such as separating out any units and/or standardizing measurements, but this is sufficient for a great deal of analysis and things like that can probably be done with the file itself. The process runs at a casual pace of about 2 rows a second and takes less than 20 minutes to run through the roughly 2500 recipes currently on the site. As complicated as this may seem, it only took the better part of an afternoon to figure out. Writing all of this out was more time consuming, actually. I’ll be the first to admit that this method certainly has some strong limitations to the sort of pages that can be scraped successfully, but it was an excellent learning exercise full of scenarios that I don’t come across normally when dealing with the more typical databases and spreadsheets. I hope you found it enlightening, and I look forward to sharing some analysis of this data soon.

At this point it’s just a matter of sending the necessary fields to a text file output. I only included the local URL as a unique identifier for the recipe, the ingredient name, and the quantity as columns in the file. Further improvements could probably be made, such as separating out any units and/or standardizing measurements, but this is sufficient for a great deal of analysis and things like that can probably be done with the file itself. The process runs at a casual pace of about 2 rows a second and takes less than 20 minutes to run through the roughly 2500 recipes currently on the site. As complicated as this may seem, it only took the better part of an afternoon to figure out. Writing all of this out was more time consuming, actually. I’ll be the first to admit that this method certainly has some strong limitations to the sort of pages that can be scraped successfully, but it was an excellent learning exercise full of scenarios that I don’t come across normally when dealing with the more typical databases and spreadsheets. I hope you found it enlightening, and I look forward to sharing some analysis of this data soon.