Joseph A. di Paolantonio

Benjamin B. Goewey

Much of the current hype in Data Management & Analytics is around the concept of Big Data, and Hadoop is at the center of the hype storm. The other two hot areas are Mobile & Elastic Cloud Computing. Cloud is central to both Big Data & Mobile implementations. This blog post will focus on Big Data, and how Datamensional has helped its customers meet this challenge with tools from Pentaho, Microsoft and IBM that work with Hadoop and other NoSQL data management systems.

In February 2010, I suggested this approach to big data:

“Big data really isn’t about the amount of data (TB & PB & more) so much as it is about the volumetric flow and timeliness of the data streams. It’s about how data management systems handle the various sources of data as well as the interweaving of those sources. It means treating data management systems in the same way that we treat the Space Transportation System, as a very large, complex system.”

Many now flock to the definition of Big Data as three Vs. These Vs were debated hotly throughout 2011, on Twitter, blogs, and journals, with more Vs added. One good example can be found in R. Ray Wang’s article on Forbes:

http://www.forbes.com/sites/raywang/2012/02/27/mondays-musings-beyond-the-three-vs-of-big-data-viscosity-and-virality/

The three Vs on which everyone agrees are:

- Volume – essentially, more data than you are accustomed to handling on your current computing platform, whether that’s Excel on your laptop, Oracle on a *nix box, or SAS on a mainframe

- Velocity – from requesting a report from IT & waiting a week or three, to near-real-time, [undefined, but not really] real-time and streaming data (a.k.a. Complex Event Processing (CEP))

- Variety – typically defined as structured (Entity Relationship Diagram (ERD) or schema-on-write modeled data in your standard RDBMS), semi-structured (such as XML) or unstructured (with email, documents & tweets as common examples)

In the article cited above, Ray adds Viscosity and Virality (not to be confused with virility). Viscosity can be seen as anything that impedes the interweaving or flow of data to create insights. Virality is how quickly an idea goes viral on the interwebs, that is, the rate at which ideas are dispersed across various Internet or Social Media sites (Twitter, YouTube, LinkedIn, Blogs, etc.).

Compare these five Vs to my definition of Big Data, given above: interweaving a heavy volumetric flow of multiple types of complex data from a variety of sources.

There are many sources of Big Data, both internal and external. To name just a few:

– Social Media

– Smartphones

– Weblogs

– Server & Network Logs

– Sensors

– The Internet of Things

Getting terabytes to petabytes of data out of one data center and into a cloud, or vice versa, or from one cloud to another, is logistically ridiculous. What’s in a cloud generally stays in that cloud. Getting Analytics closer to the data is paramount for any practical application. Many vendors have recognized this, and over the past few years the Analytic Database Management System (ADBMS), Hadoop and Cloud *aaS (* being software, infrastructure, platform, or data, as a Service) markets have been evolving at a pace not seen in decades, with Pentaho, SPSS, SAS, The R Statistical Language, and other analytical software being embedded in ADBMS or Cloud offerings from Teradata/Asterdata, EMC/Greenplum, HP/Vertica, SAP/Bobj/HANA/SybaseIQ, Oracle and IBM/Cognos/Netezza on the one hand, and AWS, MS Azure & Cloudera on the other.
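To put a rough number on "logistically ridiculous", here is a back-of-the-envelope sketch in Python. The link speeds (1 and 10 Gbit/s) and the assumption of a perfectly sustained transfer are illustrative choices of mine, not figures from any customer project; real-world throughput would normally be lower.

```python
# Rough estimate of how long it takes to move bulk data over a network link.
# Assumptions (illustrative only): decimal units (1 PB = 10**15 bytes) and a
# link that sustains its full nominal rate for the whole transfer.

SECONDS_PER_DAY = 86_400
PETABYTE = 10**15             # bytes
GIGABIT_PER_SECOND = 10**9    # bits per second


def transfer_days(num_bytes: float, link_bits_per_second: float) -> float:
    """Return the number of days needed to move num_bytes over the link."""
    seconds = (num_bytes * 8) / link_bits_per_second
    return seconds / SECONDS_PER_DAY


days_at_1_gbps = transfer_days(PETABYTE, 1 * GIGABIT_PER_SECOND)
days_at_10_gbps = transfer_days(PETABYTE, 10 * GIGABIT_PER_SECOND)

print(f"1 PB at  1 Gbit/s: {days_at_1_gbps:.1f} days")
print(f"1 PB at 10 Gbit/s: {days_at_10_gbps:.1f} days")
```

Under these assumptions a single petabyte needs roughly three months on a 1 Gbit/s link, which is why moving the Analytics to the data tends to be the more practical direction.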

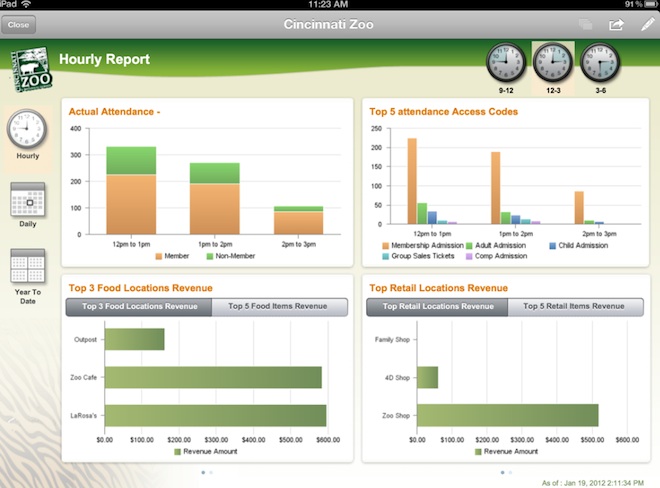

Among others, IBM, Microsoft & Pentaho offer tools to improve Analytics out of Hadoop and other Big Data sources. These tools are central to Datamensional’s work. Let’s look at one customer case study, using Pentaho Hadoop Data Integration (PHD), Hadoop HDFS & Hive, and 50,000+ rows of data from one cell phone every minute. I had the honour of working with Ben & Gerrit of Datamensional on this project.

The Status Quo: A homegrown reporting & ETL solution written in Java, leveraging some open source tools and libraries to transform cell tower log files into CSV files for loading into an Oracle data warehouse with web-based administration & reporting.

The Business Need: Provide exploratory & in-depth Analytics capabilities to customer business analysts on a rich data set that was growing at a mind-boggling pace as Smartphone use opened new avenues for services such as location-based advertising and understanding cell phone user habits. The sample data showed 50,000 records describing the usage of a single mobile device over a one-minute period.
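To get a feel for that growth, the sample rate can be extrapolated with a few lines of Python. This is only a sketch: the 50,000 records per minute comes from the sample data, but treating it as a sustained rate and the device counts used below are hypothetical assumptions for illustration.

```python
# Extrapolate the sample rate (50,000 records per device per minute) to a day.
# Assumption (hypothetical): every device sustains the sample rate all day.

RECORDS_PER_DEVICE_PER_MINUTE = 50_000   # from the sample data
MINUTES_PER_DAY = 60 * 24


def records_per_day(devices: int) -> int:
    """Return the daily record count for the given number of devices."""
    return RECORDS_PER_DEVICE_PER_MINUTE * MINUTES_PER_DAY * devices


# Device counts below are illustrative, not customer figures.
for devices in (1, 1_000):
    print(f"{devices:>5,} device(s): {records_per_day(devices):,} records/day")
```

Even one device at that rate produces 72 million records a day, so the row counts outgrow a CSV-to-RDBMS load process very quickly.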

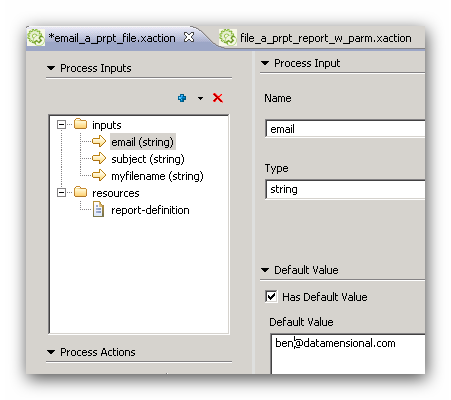

The Datamensional Solution: A Proof of Concept comparing the homegrown solution against Hadoop & Hive with the integrated Pentaho solution for Hadoop, including PHD and the pluggable architecture of the Pentaho BI Server. The PoC provided in-depth solutions in six areas:

- Installing, configuring and comparing the performance of Pentaho Hadoop Data Integration

- Demonstrating Pentaho’s Plug-in Architecture and use as a BI Platform

- Connecting Pentaho Analyzer to third party OLAP engines

- Demonstrating Pentaho Clustering & Parallel Processing capabilities

- Customizing the look & feel of the Pentaho User & Administration Consoles

- Automating the Installation Process including customizations

The Results: All points of the PoC were exceeded. While all six points are important for developing a Big Data solution or product using Pentaho, the first & fourth points, regarding PHD & Clustering, are the most important for Big Data & Big Data Analytics. Other areas of the PoC showed the flexibility of the Pentaho Business Analytics platform for Reporting, OLAP, Data Mining & Dashboards using Pentaho and other solutions, both from the Pentaho Community and as integrated by the Datamensional team.

One amazing result came from replacing the Hadoop libraries and native Hive JDBC with the Pentaho PHD versions. With their homegrown solution, a simple report took so long that the customer killed the job rather than wait any longer; with PHD, the response time dropped to less than 10 seconds. Note that the PHD libraries replace the native Hadoop lib directory & files on the Hadoop name node and ALL data nodes. Clustering Pentaho Data Integration (PDI) by installing the lightweight Jetty server, Carte, on each node parallelizes data integration and increases throughput for Hadoop & Hive. PDI provides many mechanisms to tune the performance of individual transformation steps and job entries, as well as clustering, parallelizing and partitioning for Transformations and Jobs.

The PHD libraries allow PDI and Hadoop to each bring their strengths to the data management challenges of moving, controlling, cleaning and pre-processing extreme volumetric flows of data. Using the native Hadoop libraries, a load of the sample data took over 5 hours. This was reduced to 3 seconds using the PHD libraries and the PDI client, Spoon, to create the transformation and orchestrate the job among the various clusters.
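For a sense of scale, the two load times can be turned into a speedup factor. The sketch below uses only the two figures quoted above; since the native load took "over" 5 hours, the result is a lower bound rather than an exact measurement.

```python
from datetime import timedelta

# Load times quoted in the PoC results.
native_load = timedelta(hours=5)    # "over 5 hours", so treat as a minimum
phd_load = timedelta(seconds=3)

# Dividing one timedelta by another yields a plain float ratio.
speedup_lower_bound = native_load / phd_load

print(f"Speedup of at least {speedup_lower_bound:,.0f}x")
```

That works out to a speedup of at least 6,000 times on the sample load.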

Additional Resources:

TDWI – The Three Vs of Big Data Analytics

Datamensional Resources:

IBM on Big Data

Datamensional’s Big Data Integration Service

Pentaho Big Data Preview

Pentaho Community: Efficiency of using ETL for Hadoop vs. Code or PIG

Microsoft Case Study: MS BI and Hadoop